Locking - Part 2 Traditional Locking

In the first article of this mini-series, we looked briefly at how UniVerse uses its lock table to ensure physical integrity of the database by blocking concurrent access to files at the group level. In this article we consider application locking as a means of ensuring logical integrity.

Why Locking?

The reason for locking is to ensure that all operations take place against a current copy of a record, in a way that guarantees that no updates are lost.

Sounds like time for a highly contrived example.

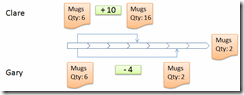

Mugs for Mugs has a stock control system. Clare receives a parcel from their suppliers with 10 mugs to add to the 6 already in store. At the same time Gary receives an order for 4 mugs.

Gary reads the stock record, notes that there are six mugs present and takes off 4, leaving 2. He finishes wrapping the parcel and saves the change. At the same time, Clare also reads the stock record and notes that there are six mugs present. She adds her ten mugs making 16, and saves the change before Gary finishes wrapping. Gary is a little slower than Clare (or dispatch is more difficult, he would say). Without locking, the system simply records what was committed last. The ten mugs have gone missing (fig. 1).

Fig. 1 Life Without Locking

So how should the system work? There are three possible options:

- Gary is not allowed to update the record until Clare has finished with it.

- One or other update is disallowed.

- Only the changes are recorded and they are applied to the record when it is saved.

These three scenarios demonstrate the main approaches to locking: pessimistic, optimistic and merging.

Traditional Locking Model

The traditional MultiValue locking model is a type of pessimistic locking that is based on intent. Before performing any operation that would lead to a change being made to a record, a developer is expected to request an update lock, which in turn confers an exclusive right to modify and commit that record. Once the developer has gained the update lock he or she is free to perform any modifications to the record and to write or delete the item — or to release the record lock should the update be abandoned (fig. 2).

Fig. 2 Now with added locking

Since it is normally expected that a record should be read prior to modification, the lock is most usually requested using a READU (Read for Update) statement:

READU Record FROM MYFILE, MyID ...

Under normal circumstances, the READU statement should either gain the record lock or wait patiently until the lock is available. The problem is, of course, that waiting patiently isn't necessarily the default action of most users who might simply assume that the session had hung or might, in fact, have better things to do than staring at a blank screen or whirling cursor. Some systems helpfully beep to show that the system is, in fact, still operating but that may not be sufficient to stop users closing their sessions in frustration (and DBAs having to clean up the resulting mess).

So a partial solution is to be found in the shape of the LOCKED clause. In addition to the usual THEN, ELSE and ON ERROR clauses, the READU statement has a LOCKED clause that fires if the record is already locked by another session. This allows developers to intervene or at least manage expectations (fig.3).

Fin = @False

Loop

ReadU MyRecord From MYFILE, MyID Locked

Crt @(0,22):'Hey, that lousy user ':Status():' has locked it'

Crt 'Trying again at':OConv(Time() + 1,"MTS")

Sleep 1

End Then

Fin = @True

End

Until Fin Do

Repeat

Fig. 3

It is worth noting that the LOCKED clause is the first clause on the statement, which is appropriate since the lock request is made first and the read action will not proceed if the lock cannot be taken. READU is not the only locking command: the RECORDLOCKU statement also exists on many platforms.

Releasing Locks

Locks are automatically released on a WRITE or DELETE action unless overridden by using the WRITEU or DELETEU statement. A frequent misunderstanding is that the WRITEU statement does not itself take a record lock if one does not exist: it only preserves an existing lock.

If the write or delete is abandoned, it is up to the developer to explicitly release the update lock. The RELEASE statement comes in three syntaxes with different levels of scope:

RELEASE fileVariable, itemId RELEASE fileVariable RELEASE

Good, bad, worse. Release without a record id is perhaps excusable for batch processes, but the global RELEASE is a disaster waiting to happen. That will release any and all locks held by that process — and not necessarily just from the one program, which is an issue when we look at client/server applications.

RELEASE is not the only example of lazy programming where locking is concerned. All locks held against a file variable are released when the file variable goes out of scope. Whilst useful, that can mask a bad habit in which the developer may frequently forget to explicitly release an item. File variables held in common only go out of scope when the common block is destroyed, which for named common is typically at the end of the session, so assuming closure as a means of releasing is bad programming.

Good Intentions

The strange thing about the traditional locking model is that update locking is intent based, not mandatory. You can read and write records with no locking and the database will not complain. It is a feature that new developers need to learn quickly, but one that will not be assisted by the software. A lack of discipline in taking out record locks is a problem when it comes to retrofitting transactional control into older systems. Consistent locking patterns is one of the benefits when it comes to using 4GLs and application runners, as these usually include hard baked locking.

An interesting approach was taken by the UniObjects middleware for UniVerse and UniData. Remembering that this was targeted at client side developers working away from the database — who may not be MultiValue programmers — rather than requiring the developer to explicitly take out and release record locks, UniObjects offers the option to create a locking strategy at a session or individual file level. The strategy dictates when record locks should be taken and under what circumstances they should be released, which is a brave attempt to simplify the model whilst making it consistent.

Shared Read Locks

So far we have only considered locking from the point of view of updates, but there is another level of locking available. Whilst not used very often, a shared read lock, created using a READL or RECORDLOCKL statement, prevents other processes from gaining an update lock but does not block other processes from also gaining a shared read lock. Similarly, a shared file lock prevents another process from getting an exclusive file lock. Shared locks are therefore used to ensure the consistency of data as it is being presented, guaranteeing that the view is an accurate snapshot in time. It's a way of saying — don't mess with my data until I've finished looking at it!

Transactions and Isolation

The traditional locking model was built in an age before true transaction boundaries were available under the MultiValue model. Systems assumed that any writes would complete and therefore the locks could be discarded as soon as a write operation had returned.

With transaction handling, locks need to take center stage. You start a long transaction, possibly involving a large number of records that need updating. Just as for the individual update above, in order to guarantee transactional integrity it is essential that no other changes from other processes can intervene whilst the transaction is running. This means that you need to acquire locks for everything modified in the transaction until the transaction has either been committed or rolled back. This can, of course, stretch the lifetime of a lock very much further than that required for non-transactional processing. Any missing locks could allow another process to change the data during the transaction phase, thereby compromising data integrity. Where there are nested transactions, locks in the child transaction should not be released until the parent transaction has completed since the child will not be committed until then.

It is for this reason that UniVerse enforces transactional integrity through the twin concepts of isolation levels and isolation modes. An isolation mode, in UniVerse terms, is implemented as a set of locking prerequisites that must be met in order for processing to continue at a given isolation level. Isolation levels are one of the inheritances from the SQL model that dictate how safe and repeatable a system should be to meet transactional constraints. In the lowest of these, NO.ISOLATION, there are no prerequisites and the database acts as a regular MultiValue system. As you go up through the tree, UniVerse becomes more restrictive in its locking requirements in order to guarantee integrity of operations.

In practice this means that for a READ COMMITTED isolation level — the recommended level for busy sites — any reads should be protected by at least a shared read lock and any writes by at least a record update lock. The words at "least" here are significant: an update lock trumps a read lock, and a file lock trumps a record lock. A file lock requests exclusive update access to a database file and takes precedence over all record locks within the file. A file lock is requested using the FILELOCK statement and released using the FILEUNLOCK statement.

Lock Promotion

There are some circumstances where UniVerse will insist on an isolation mode even if one has not been explicitly set. Triggers are one such example — because a trigger could be part of a transaction, UniVerse requires that any updates performed by a trigger must be protected by an update lock. If not, the trigger will simply fail and the update will abort.

Another such situation regards SQL-oriented operations. A SQL UPDATE runs as a single transaction so the database needs to ensure that all candidates are locked, which could involve many thousands of record locks. Whilst effective locking is critical for logical consistency and mandated for some isolation modes, taking out huge numbers of record locks can swamp and eventually stop the system.

So for some bulk operations, notably large SQL UPDATE statements, UniVerse will automatically consolidate record locks to a single file lock once a configurable threshold has been reached, to avoid the danger of filling the lock table at the expense of the greater risk of contention.

Non-Transactional Bulk Updates

When running regular MultiValue processing, even large transactions are typically relatively limited in scope, possibly affecting a few dozens or hundreds of records. If you run the update through Basic, then you have options such as using soft locks throughout and ensuring that all reads and writes go through your own subroutines (ignoring PQN PROC and other such ugly mechanisms for updating), locking some identifier known to the processing such as a batch header record, or taking a file lock. If the process is truly wide ranging you could also use the old task synchronisation locks that seem to have been largely forgotten now using the LOCK n statement to ensure that only a single instance could run the update, as a belt and braces procedure to further limit the impact.

The traditional locking model served MultiValue developers well for many years, but in the next article we will look at where the model breaks down.