Developing for Google Glass Part 2

Our plan had three parts:

- Hook up Glass to an Inventory Database,

- Use the scanner capabilities to read product and box labels,

- Create an X-Ray effect, where looking at the labels pulls up information about the inventory.

In Part 1, we covered my attempt to build a pure Glass application for inventory control. While Glass has all the parts I needed, it lacked the glue to make them work together. In Part 2 we will see how our plan can be done as an Android App and still work using Glass. The key is the true-but-false statement that Glass has a built-in full instance of Android.

It is true because it does have Android inside. It is false because there is no touch-screen equivalent. With Glass you have "tap" for select, "swipe forward" for scroll forward, "swipe back" for scroll back, and "swipe down" for cancel. Additionally, you have voice commands for such acts as "take a picture" or "google" a word or phrase. Much of the usability of Android is built upon the touch interface. Losing that bit of magic changes a lot. We can create our application in Android if and only if we embrace the limits.

Inventory Database

I called Ladybridge and arranged for a copy of QM for my test. The problem was that my ISP doesn't allow command line access. Working with Martin, we developed a web install process that allowed me to install QM without needing the command line. Once I had a MultiValue environment, I loaded a small amount of data. Because QM, like most MultiValue databases, has the ability to be exposed as a web service, I knew that I'd have no trouble using it on the backend.

The idea was to set up a pair of subroutines. The first, FINDBOX, looks up the inventory record for the barcode that was scanned. The second, MAIN, acts as a gatekeeper so that I can add other features in the future (fig. 2).

    subroutine MAIN(RESULT,CMD,USER,OPTIONS)
    call FINDBOX(RESULT,CMD,USER,OPTIONS)
    return

    subroutine FINDBOX(RESULT,CMD,USER,OPTIONS)
    * CMD layout: find 9781906471859
    INV.ID = oconv(CMD,'G1 1')
    read INV.REC from INV.FILE, INV.ID else
       INV.REC = INV.ID : ' not found.'
    END
    convert @AM to char(13) in INV.REC
    RESULT = '<div class="data">' : INV.REC : '</div>'
    return

Fig. 2 Our Code
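
For readers who don't work in MultiValue BASIC, here is a rough Python rendering of what the two subroutines in fig. 2 do. It is only a sketch: the `INVENTORY` dictionary and its sample record are made up to stand in for the QM inventory file, and the function names are mine, not part of QM.

```python
# Illustrative Python equivalent of MAIN and FINDBOX from fig. 2.
# INVENTORY is a hypothetical stand-in for the QM inventory file.
AM = "\xfe"  # attribute mark, char(254), the MultiValue field separator

INVENTORY = {
    # Made-up sample record: three attributes joined by attribute marks.
    "9781906471859": AM.join(["Sample title", "Bin A-12", "3 on hand"]),
}


def find_box(cmd, user="", options=""):
    """Look up the ID found in the second space-delimited word of cmd."""
    # Same idea as oconv(CMD,'G1 1'): skip one word, keep the next one.
    parts = cmd.split(" ")
    inv_id = parts[1] if len(parts) > 1 else ""
    # Same idea as READ ... ELSE: fall back to a "not found" message.
    record = INVENTORY.get(inv_id, inv_id + " not found.")
    # Same idea as: convert @AM to char(13) in INV.REC
    record = record.replace(AM, "\r")
    return '<div class="data">' + record + "</div>"


def main(cmd, user="", options=""):
    """Gatekeeper: today it only forwards to find_box."""
    return find_box(cmd, user, options)
```

Calling `main("find 9781906471859")` returns the sample record wrapped in the `div`, while an unknown ID comes back as the ID followed by "not found."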

Scanner

As I mentioned in the last installment, the Scanner in Glass wasn't going to do what I needed it to do. After consulting with a few Glass gurus, it was determined that the best course would be to send a picture and let the back end do the decode. As clever as our various MultiValue implementations are, I don't know of one which will tease a barcode out of a picture natively. We needed a piece to fit between QM and Glass.

Zxing (pronounced Zebra Crossing) <https://code.google.com/p/zxing/> suited the need. It is a backend application for turning a picture of a barcode into the code numbers. It supports all of these: UPC-A, UPC-E, EAN-8, EAN-13, Code 39, Code 93, Code 128, ITF, Codabar, RSS-14 (all variants), RSS Expanded (most variants), QR Code, Data Matrix, Aztec ('beta' quality), and PDF 417 ('alpha' quality).

With zxing in place, I would be able to meet my spec.

X-Ray

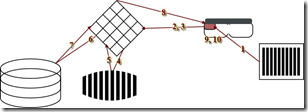

The return trip was the next challenge. Just like with QM and zxing, the solution is the web. Here's my new roadmap (fig. 1):

Fig. 1

- Glass: User scrolls to my app and taps to activate it.

- Glass App: App allows the user to tap to take a picture and send it over the web.

- Backend Web (via PHP): receives the picture.

- Backend Web: calls zxing.

- Zxing converts the picture and returns a number.

- Backend Web (via PHP): takes the returned number and calls the QM subroutine.

- QM: looks up the number and returns the contents.

- Backend Web (via PHP): takes the response from QM and sends it back to the Glass App.

- Glass App: Like any other browser, it waits for the response from the server and then displays the results.

- Glass App: Since we see the result inside the App, we can let the app take the next picture simply by accepting the next tap.
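
The backend steps in the roadmap can be sketched as one small function. This is a minimal illustration in Python rather than the PHP I planned to use, and it makes some assumptions: `decode_barcode` and `call_qm` are hypothetical hooks standing in for the zxing call and the QM web service call, and the "no barcode" message is my own invention.

```python
from typing import Callable, Optional


def handle_scan(
    picture: bytes,
    user: str,
    decode_barcode: Callable[[bytes], Optional[str]],  # hypothetical zxing wrapper
    call_qm: Callable[[str, str], str],                # hypothetical QM web call
) -> str:
    """Receive a picture, decode it, ask QM, and return HTML for Glass."""
    code = decode_barcode(picture)
    if code is None:
        # Nothing readable in the picture; let the user tap and try again.
        return '<div class="data">No barcode found. Tap to try again.</div>'
    # Build the command in the layout FINDBOX expects: "find <number>".
    return call_qm("find " + code, user)


# Stand-ins so the sketch runs without zxing or QM installed.
def fake_decoder(picture: bytes) -> Optional[str]:
    return "9781906471859" if picture else None


def fake_qm(cmd: str, user: str) -> str:
    return '<div class="data">' + cmd + " for " + user + "</div>"
```

Passing the decoder and the QM call in as parameters keeps the flow testable on its own; the real versions would wrap zxing and the QM web service.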

Wrap Up

I've yet to finish this masterpiece. Life continually intervenes. I now have every piece. All I lack is time. Since this approach will work on any Android device, maybe one of you will beat me to it and send me a finished copy of the app. There's no reason it couldn't be an iOS app, for that matter. We have reduced the front end to: (1) send a picture and user ID, (2) display the response, (3) repeat or quit. This allows us to implement the app on nearly any hardware.
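
To show how little the front end has to do, here is a hedged sketch of that three-step loop in Python. It is not Glass or Android code: the gesture names and the `take_picture`, `send`, and `display` callables are hypothetical placeholders for whatever the device actually provides.

```python
def run_front_end(gestures, take_picture, send, display, user_id):
    """Loop over gestures: tap scans a label, swipe_down quits.

    gestures is any iterable of gesture names; the names used here
    ("tap", "swipe_down") are assumptions for this sketch.
    Returns the number of pictures sent.
    """
    scans = 0
    for gesture in gestures:
        if gesture == "swipe_down":
            break  # (3) quit
        if gesture == "tap":
            response = send(take_picture(), user_id)  # (1) picture + user ID
            display(response)                         # (2) show the response
            scans += 1
        # Any other gesture is ignored; the loop simply waits for the next one.
    return scans
```

Because the loop only depends on four callables, the same shape could sit behind a Glass tap, an Android button, or an iOS gesture.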

I'll continue to plot and plan with my Glass. Perhaps there's another article in the offing.